[Chat] Operations

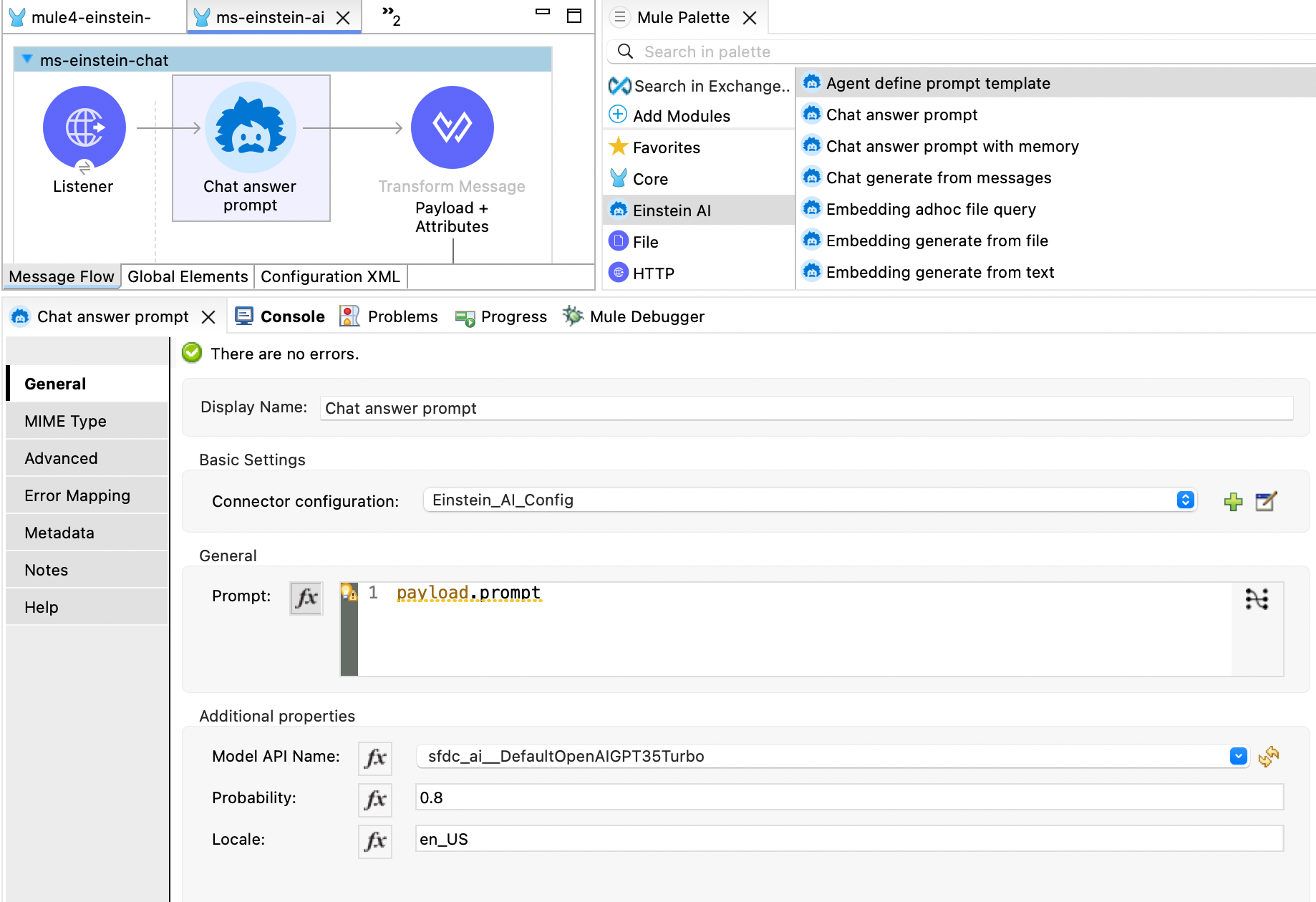

[Chat] Answer Prompt

The Chat answer prompt operation sends a simple prompt request to the configured LLM. It takes a plain-text prompt as input and responds with a plain-text answer.

Input Fields

Module Configuration

This refers to the Einstein AI configuration set up in the getting started section.

General Operation Field

- Prompt: This field contains the prompt as plain text for the operation.

Additional Properties

- Model Name: The name of the model to use (default is OpenAI GPT 3.5 Turbo).
- Probability: The model's probability to stay accurate (default is 0.8).
- Locale: Localization information, which can include the default locale, input locale(s), and expected output locale(s) (default is en_US).

#### Example Payload

```json
{
  "prompt": "What is the capital of Switzerland"
}
```

XML Configuration

Below is the XML configuration for this operation:

```xml
<mac-einstein:chat-answer-prompt
    doc:name="Chat answer prompt"
    doc:id="66426c0e-5626-4dfa-88ef-b09f77577261"
    config-ref="Einstein_AI"
    prompt="#[payload.prompt]" />
```

Output Field

This operation responds with a string payload.

Example Response

Here is an example response of the operation:

```json
{
  "payload": {
    "response": "The capital of Switzerland is Bern."
  },
  "attributes": {
    "generationId": "c820b8bf-c934-4606-9dca-68994a019127",
    "responseParameters": {
      "tokenUsage": {
        "outputCount": 8,
        "totalCount": 21,
        "promptTokenDetails": {
          "cachedTokens": 0,
          "audioTokens": 0
        },
        "completionTokenDetails": {
          "reasoningTokens": 0,
          "rejectedPredictionTokens": 0,
          "acceptedPredictionTokens": 0,
          "audioTokens": 0
        },
        "inputCount": 13
      },
      "systemFingerprint": null,
      "model": "gpt-3.5-turbo-0125",
      "object": "chat.completion"
    },
    "generationParameters": {
      "refusal": null,
      "index": 0,
      "logprobs": null,
      "finishReason": "stop"
    },
    "contentQuality": {
      "scanToxicity": {
        "categories": [
          {
            "categoryName": "identity",
            "score": "0.0"
          },
          {
            "categoryName": "hate",
            "score": "0.0"
          },
          {
            "categoryName": "profanity",
            "score": "0.0"
          },
          {
            "categoryName": "violence",
            "score": "0.0"
          },
          {
            "categoryName": "sexual",
            "score": "0.0"
          },
          {
            "categoryName": "physical",
            "score": "0.0"
          }
        ],
        "isDetected": false
      }
    },
    "responseId": "chatcmpl-B5WtTz2CseLxHyfiqp39BDVDW0na5"
  }
}
```

Example Use Cases

The chat answer prompt operation can be used in various scenarios, such as:

- Customer Service Agents: For customer service teams, this operation can provide quick responses to customer queries, summarize case details, and more.

- Sales Operation Agents: For sales teams, it can help generate quick responses to potential clients, summarize sales leads, and more.

- Marketing Agents: For marketing teams, it can assist in generating quick responses for social media interactions, creating engaging content, and more.
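Besides the answer itself, the operation's attributes carry token usage, the finish reason, and a toxicity scan. The following is a minimal Python sketch for reading those fields. It assumes the response has been serialized to JSON in the shape of the example response above, for instance after being logged or written out from a Mule flow. The `summarize` helper is hypothetical and is not part of the connector.

```python
import json

# Trimmed copy of the example response above; only the fields read below are kept,
# and only two of the six toxicity categories are included.
raw = """
{
  "payload": {"response": "The capital of Switzerland is Bern."},
  "attributes": {
    "responseParameters": {
      "tokenUsage": {"outputCount": 8, "totalCount": 21, "inputCount": 13},
      "model": "gpt-3.5-turbo-0125"
    },
    "generationParameters": {"finishReason": "stop"},
    "contentQuality": {
      "scanToxicity": {
        "categories": [
          {"categoryName": "hate", "score": "0.0"},
          {"categoryName": "violence", "score": "0.0"}
        ],
        "isDetected": false
      }
    }
  }
}
"""


def summarize(message: dict) -> dict:
    """Hypothetical helper: extract the answer, token counts, and toxicity flag."""
    attrs = message["attributes"]
    usage = attrs["responseParameters"]["tokenUsage"]
    toxicity = attrs["contentQuality"]["scanToxicity"]
    # Scores arrive as strings in the example, so convert them before comparing.
    max_score = max(float(c["score"]) for c in toxicity["categories"])
    return {
        "answer": message["payload"]["response"],
        "input_tokens": usage["inputCount"],
        "output_tokens": usage["outputCount"],
        "total_tokens": usage["totalCount"],
        "finish_reason": attrs["generationParameters"]["finishReason"],
        "toxic": toxicity["isDetected"],
        "max_toxicity_score": max_score,
    }


summary = summarize(json.loads(raw))
print(summary["answer"])  # The capital of Switzerland is Bern.
# In the example, inputCount + outputCount equals totalCount (13 + 8 = 21).
print(summary["input_tokens"] + summary["output_tokens"])  # 21
```

In a real flow, the same fields would more naturally be read with DataWeave. The Python version simply makes the response structure explicit.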

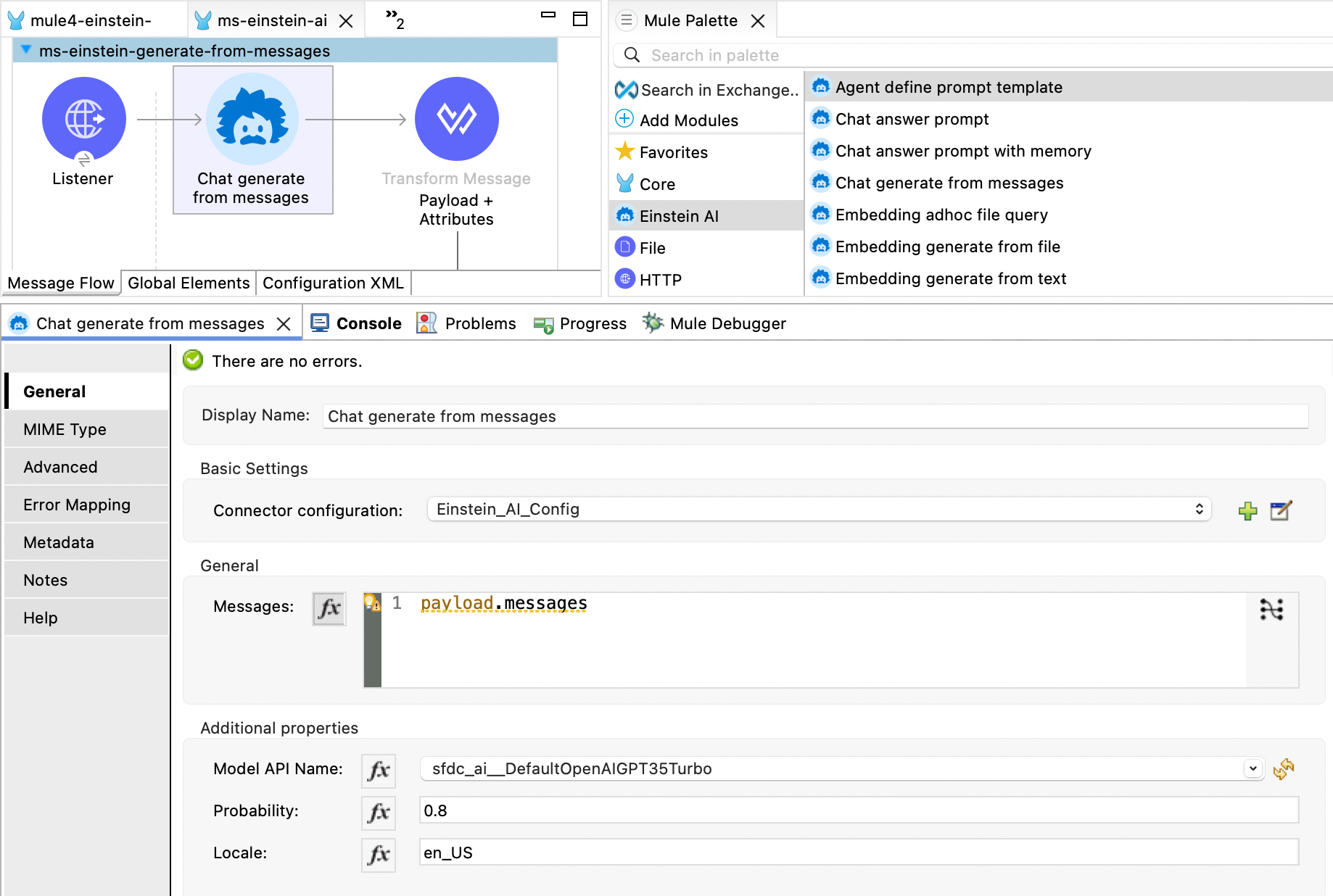

[Chat] Generate from Messages

The Chat generate from messages operation sends a list of chat messages to the configured LLM and generates a response from them. It takes the messages as input and responds with a JSON payload containing the generated answer.

Input Fields

Module Configuration

This refers to the Einstein AI configuration set up in the getting started section.

General Operation Field

- Messages: This field contains the messages from which you would like to generate a chat response. An example payload is shown under Example Input below.
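In the example input of this operation, the Messages field holds a JSON array that has been serialized into a single string, not a nested JSON array. The following minimal Python sketch shows one way to produce that shape, for example when a client builds the request body. The `conversation` list is hypothetical. Its `role`/`content` keys follow the example input.

```python
import json

# Hypothetical conversation using the "role"/"content" keys from the example input.
conversation = [
    {"role": "user", "content": "Can you give me a recipe for cherry pie?"},
]

# Serialize the array into a string first, then embed that string in the payload.
payload = {"messages": json.dumps(conversation)}

print(json.dumps(payload))
# {"messages": "[{\"role\": \"user\", \"content\": \"Can you give me a recipe for cherry pie?\"}]"}
```

Serializing twice is what produces the escaped quotes seen in the example input. Passing the list directly would yield an array rather than a string.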

Additional Properties

- Model Name: The name of the model to use (default is OpenAI GPT 3.5 Turbo).
- Probability: The model's probability to stay accurate (default is 0.8).
- Locale: Localization information, which can include the default locale, input locale(s), and expected output locale(s) (default is en_US).

#### Example Input

```json
{
  "messages": "[{\"role\": \"user\", \"content\": \"Can you give me a recipe for cherry pie?\"}]"
}
```

XML Configuration

Below is the XML configuration for this operation:

```xml
<mac-einstein:chat-generate-from-messages
    doc:name="Chat generate from messages"
    doc:id="94fa27f3-18ce-436c-8a5f-10b8dbfa4ea3"
    config-ref="Einstein_AI"
    messages="#[payload.messages]" />
```

Output Field

This operation responds with a JSON payload.

Example Response

Here is an example response of the operation:

```json
{
  "payload": {
    "generations": [
      {
        "role": "assistant",
        "id": "750cd47e-d5a7-4884-bbc3-0a999739f235",
        "contentQuality": {
          "scanToxicity": {
            "categories": [
              {
                "score": "0.0",
                "categoryName": "identity"
              },
              {
                "score": "0.0",
                "categoryName": "hate"
              },
              {
                "score": "0.0",
                "categoryName": "profanity"
              },
              {
                "score": "0.0",
                "categoryName": "violence"
              },
              {
                "score": "0.0",
                "categoryName": "sexual"
              },
              {
                "score": "0.0",
                "categoryName": "physical"
              }
            ],
            "isDetected": false
          }
        },
        "parameters": {
          "finishReason": "stop",
          "index": 0
        },
        "content": "Sure! Here is a simple recipe for cherry pie:\n\nIngredients:\n- 4 cups fresh or frozen cherries, pitted\n- 1 cup granulated sugar\n- 1/4 cup cornstarch\n- 1/4 teaspoon almond extract\n- 1 tablespoon lemon juice\n- 1/4 teaspoon salt\n- 1 package of refrigerated pie crusts (or homemade pie crust)\n\nInstructions:\n1. Preheat your oven to 400°F (200°C).\n2. In a large mixing bowl, combine the cherries, sugar, cornstarch, almond extract, lemon juice, and salt. Stir until well combined.\n3. Roll out one of the pie crusts and place it in a 9-inch pie dish. Trim any excess dough from the edges.\n4. Pour the cherry filling into the pie crust.\n5. Roll out the second pie crust and place it on top of the cherry filling. Trim any excess dough and crimp the edges to seal the pie.\n6. Cut a few slits in the top crust to allow steam to escape during baking.\n7. Optional: brush the top crust with a beaten egg or milk for a golden finish.\n8. Place the pie on a baking sheet to catch any drips and bake in the preheated oven for 45-50 minutes, or until the crust is golden brown and the filling is bubbly.\n9. Allow the pie to cool before serving. Enjoy your delicious cherry pie!"
      }
    ]
  },
  "attributes": {
    "tokenUsage": {
      "outputCount": 303,
      "totalCount": 320,
      "promptTokenDetails": {
        "cachedTokens": 0,
        "audioTokens": 0
      },
      "completionTokenDetails": {
        "reasoningTokens": 0,
        "rejectedPredictionTokens": 0,
        "acceptedPredictionTokens": 0,
        "audioTokens": 0
      },
      "inputCount": 17
    },
    "systemFingerprint": null,
    "model": "gpt-3.5-turbo-0125",
    "object": "chat.completion"
  }
}
```

Example Use Cases

The chat generate from messages operation can be used in various scenarios, such as:

- Customer Service Agents: For customer service teams, this operation can generate responses based on previous messages, providing context-aware support and summarizing conversations.

- Sales Operation Agents: For sales teams, it can help in drafting follow-up messages, summarizing interactions with clients, and generating responses to inquiries.

- Marketing Agents: For marketing teams, it can assist in creating engaging follow-up messages, summarizing customer interactions, and generating content based on previous conversations.
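The generated text of this operation sits inside the `payload.generations` array, not in a single `response` field. The following minimal Python sketch pulls the assistant replies out of a response shaped like the example response above. It assumes the response is available as JSON text, and the `assistant_replies` helper is hypothetical.

```python
import json

# Trimmed copy of the example response above; the content string is shortened.
raw = """
{
  "payload": {
    "generations": [
      {
        "role": "assistant",
        "id": "750cd47e-d5a7-4884-bbc3-0a999739f235",
        "parameters": {"finishReason": "stop", "index": 0},
        "content": "Sure! Here is a simple recipe for cherry pie: ..."
      }
    ]
  },
  "attributes": {
    "tokenUsage": {"outputCount": 303, "totalCount": 320, "inputCount": 17},
    "model": "gpt-3.5-turbo-0125"
  }
}
"""


def assistant_replies(message: dict) -> list[str]:
    """Hypothetical helper: return assistant generation texts ordered by index."""
    generations = message["payload"]["generations"]
    ordered = sorted(generations, key=lambda g: g["parameters"]["index"])
    return [g["content"] for g in ordered if g["role"] == "assistant"]


response = json.loads(raw)
replies = assistant_replies(response)
print(replies[0][:5])  # Sure!
print(response["attributes"]["tokenUsage"]["totalCount"])  # 320
```

The two example responses are shaped differently. Here `tokenUsage` sits directly under `attributes`, whereas the Chat answer prompt example nests it under `attributes.responseParameters`. Code that reads token usage from both operations therefore needs two different paths.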

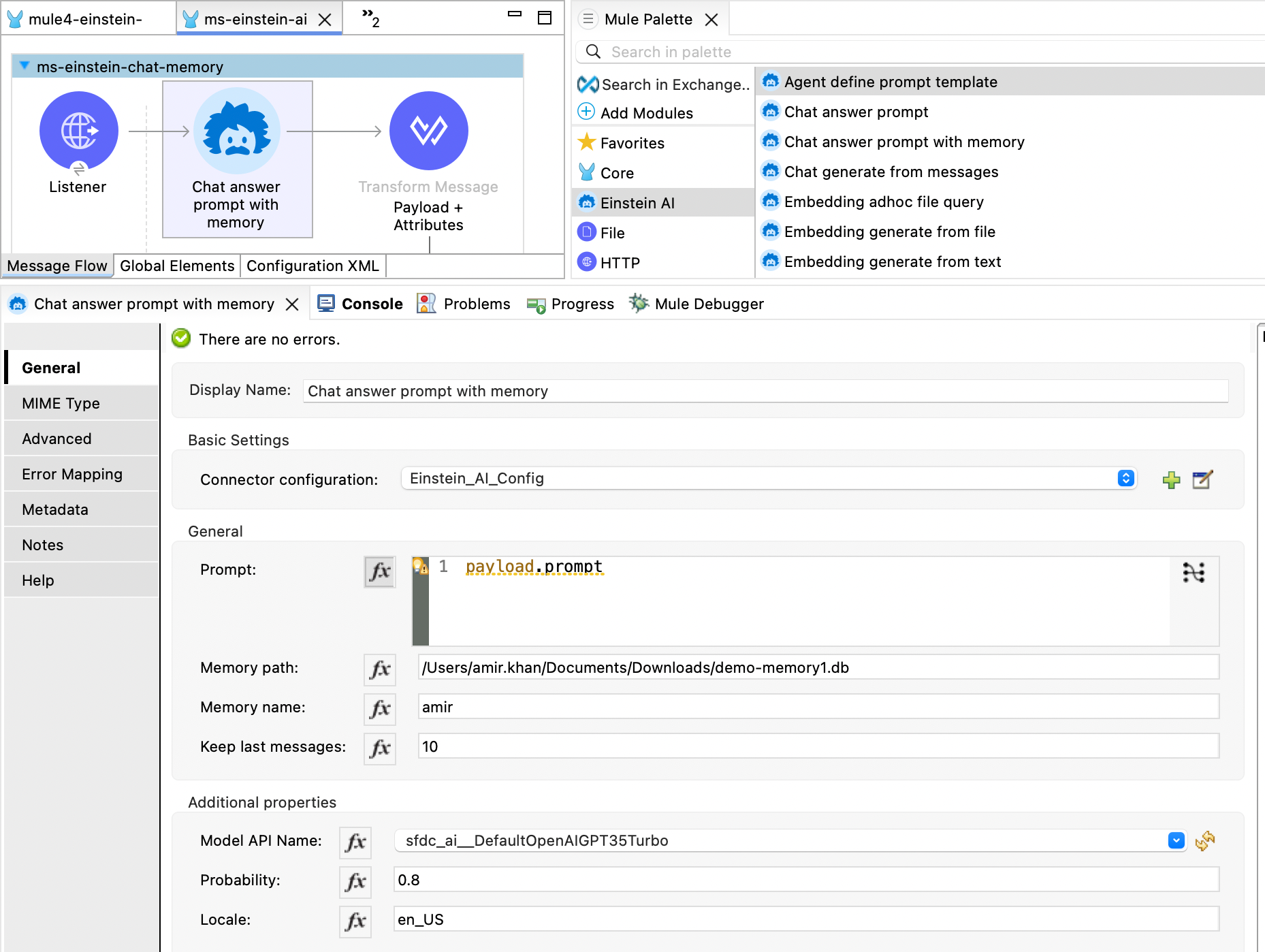

[Chat] Answer Prompt with Memory

The Chat answer prompt with memory operation answers a prompt while retaining the conversation history. This makes it useful for multi-user chat scenarios in which each conversation's earlier messages should inform later answers.

Input Fields

Module Configuration

This refers to the Einstein AI configuration set up in the getting started section.

General Operation Fields

- Data: This field contains the prompt for the operation.

- Memory Name: The name of the conversation. For multi-user support, you can enter the unique user ID.

- Dataset: This field contains the path to the in-memory database for storing the conversation history. You can also use a DataWeave expression for this field, such as `mule.home ++ "/apps/" ++ app.name ++ "/chat-memory.db"`.

- Max Messages: The maximum number of messages to remember for the conversation defined in Memory Name (`keepLastMessages` in the XML configuration). This field expects an integer value.
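The following sketch illustrates how Memory Name, Dataset, and the message limit interact. It is a minimal Python model of a per-conversation history with a "keep the last N messages" window. It is not the connector's implementation. The use of SQLite, the table layout, and the `ConversationMemory` class are all assumptions for illustration. Each memory name (for example, a user ID) gets its own history, and only the most recent N messages are returned.

```python
import sqlite3


class ConversationMemory:
    """Hypothetical model of the operation's memory settings (not the connector's code)."""

    def __init__(self, path: str, keep_last_messages: int):
        # `path` plays the role of the Dataset field; the schema below is assumed.
        self.conn = sqlite3.connect(path)
        self.keep_last = keep_last_messages
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " memory_name TEXT NOT NULL,"
            " role TEXT NOT NULL,"
            " content TEXT NOT NULL)"
        )

    def add(self, memory_name: str, role: str, content: str) -> None:
        """Append one message to the conversation identified by memory_name."""
        self.conn.execute(
            "INSERT INTO messages (memory_name, role, content) VALUES (?, ?, ?)",
            (memory_name, role, content),
        )
        self.conn.commit()

    def history(self, memory_name: str) -> list[tuple[str, str]]:
        """Return at most keep_last messages for one conversation, oldest first."""
        rows = self.conn.execute(
            "SELECT role, content FROM messages WHERE memory_name = ?"
            " ORDER BY id DESC LIMIT ?",
            (memory_name, self.keep_last),
        ).fetchall()
        return list(reversed(rows))


# ":memory:" keeps the demo self-contained; a file path would persist the history.
memory = ConversationMemory(":memory:", keep_last_messages=2)
memory.add("user-42", "user", "Hi, I am Ana.")
memory.add("user-42", "assistant", "Hello Ana!")
memory.add("user-42", "user", "What is my name?")
memory.add("user-7", "user", "Hello from another user.")

print(memory.history("user-42"))
# [('assistant', 'Hello Ana!'), ('user', 'What is my name?')]
print(memory.history("user-7"))
# [('user', 'Hello from another user.')]
```

Keying the history by memory name is what keeps one user's messages out of another user's context. The window size trades context for prompt length.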

Additional Properties

- Model Name: The name of the model to use (default is OpenAI GPT 3.5 Turbo).
- Probability: The model's probability to stay accurate (default is 0.8).
- Locale: Localization information, which can include the default locale, input locale(s), and expected output locale(s) (default is en_US).

XML Configuration

Below is the XML configuration for this operation:

```xml
<mac-einstein:chat-answer-prompt-with-memory
    doc:name="Chat answer prompt with memory"
    doc:id="a1d7d0e0-a568-4824-9849-6f1ff03d6dee"
    config-ref="Einstein_AI"
    prompt="#[payload.prompt]"
    memoryPath="#[payload.memoryPath]"
    memoryName="#[payload.memoryName]"
    keepLastMessages="#[payload.lastMessages]" />
```

Output Field

This operation responds with a JSON payload.

Example Use Cases

Use this operation whenever the LLM needs the conversation history and its context to answer well, for example in a chat application that serves multiple users and must remember each user's earlier messages.